Why didn't we get GPT-2 in 2005?

We probably could have

The ancient Romans were never great at building ships and never tried to explore the Atlantic. The basic reason seems to be—why bother? The open ocean has no resources and is a vast plane of death.

But imagine that in 146 BC after the Romans killed everyone in Carthage, they found a chest in the basement somewhere. The chest was full of gold and labeled “Gold from Gold Island which is in the middle of the Atlantic somewhere and has lots of gold”.

It’s plausible the Romans would have figured out how to build great ships and gone to find that island, right?

Anyway…

GPT-2 probably used around 10²¹ FLOPs.

In 2019, GPT-2 was the start of large language models breaking through into public consciousness as being impressive. We don’t know exactly how many FLOPs it took to train, but here are four estimates:

Some internet people claim, with no evidence, that it took around 2 days to train GPT-2 on 256 TPUv3 chips, which, if correct, would be around 8 × 10²⁰ FLOPs.

The Chinchilla FLOPs formula says training a model with GPT-2’s parameters and training data should take 1.9 × 10²⁰ FLOPs, but this assumes a single pass over the data, and GPT-2 used multiple passes.

The Gopher paper trained a model of the same size as GPT-2 but using a 14× larger dataset, and this used around 2.5 × 10²¹ FLOPs.

Sevilla et al. say it took around 2.5 × 10²¹ FLOPs, which is cool, although I wasn’t able to figure out how they arrived at this number.

Let's call it 10²¹.
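As a rough cross-check on the second and third estimates, here is a minimal sketch of the common "6 × parameters × tokens" rule of thumb for training compute. The parameter and token counts below are assumptions brought in for illustration (roughly 1.5B parameters for GPT-2, and a 1.4B-parameter Gopher-family model trained on 300B tokens); they are not stated in the text above, and the rule itself is only an approximation.

```python
# Rough training-compute estimate using the common "6 * N * D" rule of thumb:
# about 6 FLOPs per parameter per training token (forward plus backward pass).


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training FLOPs for one pass over n_tokens."""
    return 6.0 * n_params * n_tokens


def implied_tokens(total_flops: float, n_params: float) -> float:
    """Invert the rule of thumb: tokens implied by a given FLOPs figure."""
    return total_flops / (6.0 * n_params)


# Assumed inputs, not stated in the text above:
GPT2_PARAMS = 1.5e9     # GPT-2's largest model, roughly 1.5B parameters
GOPHER_PARAMS = 1.4e9   # the similarly sized model from the Gopher paper
GOPHER_TOKENS = 300e9   # training tokens assumed for that model

gopher_flops = training_flops(GOPHER_PARAMS, GOPHER_TOKENS)
gpt2_tokens = implied_tokens(1.9e20, GPT2_PARAMS)

print(f"Gopher-sized run: {gopher_flops:.2e} FLOPs")
print(f"Tokens implied by 1.9e20 FLOPs: {gpt2_tokens:.2e}")
print(f"Dataset ratio: {GOPHER_TOKENS / gpt2_tokens:.1f}x")
```

Under these assumptions the Gopher-sized run comes out at about 2.5 × 10²¹ FLOPs, and the 1.9 × 10²⁰ figure implies a dataset roughly 14 times smaller, which is at least consistent with the numbers quoted above.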

BlueGene/L could have trained GPT-2 in about six weeks.

From 2004 to 2008, the most powerful computer in the world was BlueGene/L, located at Lawrence Livermore National Laboratory. It was built by IBM for $290 million. When it was first built it was capable of 7×10¹³ FLOPs/sec. It was expanded to 2.8×10¹⁴ FLOPs/sec by Nov 2005, and later doubled again.

To give a sense of progress, you can buy single GPUs today that can almost do that.

Anyway, how long would it have taken for this thing to train GPT-2? That’s just division: 10²¹ FLOPs / (2.8×10¹⁴ FLOPs/sec) ≈ 3,571,000 sec.

That's around 41 days.
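The same division can be sketched in a few lines of Python, which also makes it easy to try the other BlueGene/L speeds mentioned above. The `utilization` parameter is a hypothetical knob added for illustration; the estimate in the text implicitly assumes the machine runs at its full rated speed, which real training runs rarely achieve.

```python
SECONDS_PER_DAY = 86_400


def training_days(total_flops: float, flops_per_sec: float,
                  utilization: float = 1.0) -> float:
    """Days needed to perform total_flops at a given sustained speed.

    utilization is a hypothetical fraction of rated speed actually achieved;
    1.0 reproduces the simple division used in the text.
    """
    seconds = total_flops / (flops_per_sec * utilization)
    return seconds / SECONDS_PER_DAY


GPT2_FLOPS = 1e21  # the rounded estimate from above

# BlueGene/L speeds quoted in the text; the last one takes
# "later doubled again" literally, which is an assumption.
for label, speed in [
    ("as first built", 7e13),
    ("Nov 2005", 2.8e14),
    ("after doubling again", 5.6e14),
]:
    print(f"{label}: {training_days(GPT2_FLOPS, speed):.1f} days")
```

At the Nov 2005 speed this gives a little over 41 days. Halving `utilization` to 0.5 doubles that to roughly 83 days, so the conclusion is not very sensitive to the idealized assumption.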

So why didn’t it?

There are two obvious answers:

Large language models weren’t invented in 2005. We didn’t know about transformer models, the Adam optimization algorithm, layer normalization tricks, step size cosine annealing, modern tokenizers, and lots of other stuff.

Lawrence Livermore National Laboratory doesn’t care about AI. They care about nuclear weapons.

Those are certainly correct, but they aren’t really answers. If LLMs weren’t invented, why not? If people weren’t building giant supercomputers for language models, why not?

Thought experiment

Say that a few years from now, civilization is destroyed. It takes a millennium for our descendants to crawl out of their bunkers and recreate 2005-era technology. They remember nothing about large language models except this:

With 10²¹ FLOPs you can train statistical models on huge gobs of text that are good enough to be useful for generating text.

(We assume for the purpose of this exercise that AI was not the cause of civilization’s destruction.)

Would people create LLMs using 2005-era compute? Or would they wait for 2018-era compute?

It’s debatable. But I think they’d do it earlier than we did.

So why didn’t we get GPT-2 in 2005?

I think this is why:

How did GPT-2 happen?

Here’s the history as I understand it:

Two decades ago, there were language models, but they weren’t all that great.

As compute got cheaper, people could run more experiments with more compute-hungry models.

Those experiments were promising, so more people started working on the topic.

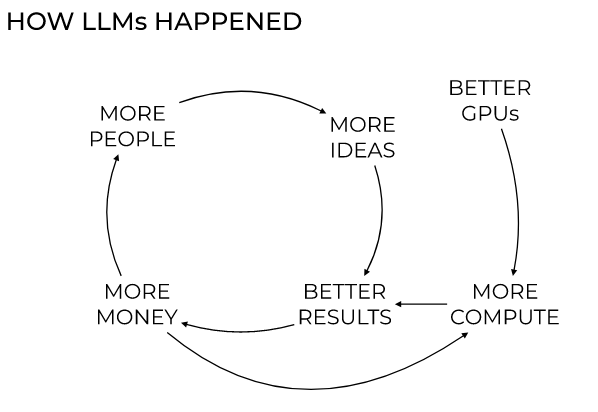

More ideas emerged, which led to better performance, which attracted even more researchers and more investment in compute and things sort of spiraled.

There’s a feedback loop between people, ideas, money, results, and interest. When compute got cheaper, that didn’t just make stuff cheaper, it kicked this whole loop into an accelerating cycle.

Getting to the moon was different.

When the US decided to land on the moon in 1961:

We basically knew what needed to happen to put a person on the moon. At least, we knew the physics of getting a spacecraft there, and what conditions needed to be maintained inside it to keep some humans alive.

We knew that landing on the moon would be really, really, really awesome.

Landing on the moon was a huge accomplishment. But we understood the system well enough to justify making a huge investment and trying to “plot a course” to a distant goal.

LLMs weren’t like that. For language models in 2000:

We didn’t really know how to make them better.

We had no idea what it would cost to build them.

We had no idea how long it would take.

We had no idea how good they might be once built.

There was no grand plan that led to LLMs being created. People just did a bunch of things step-by-step. With so much uncertainty, progress is more “little hops” than grand journeys, and technology is more “evolved” and less “designed”.

I think this also explains why AI progress has been so fast in the last few years. Around 2018, the fog of uncertainty started to lift. With things like scaling laws, the rules of the game have become clearer, and it has become possible to make progress in AI in a way that’s more like going to the moon.

Embargoes

Put another way, it’s become more and more clear that Gold Island is out there somewhere.

This is why I’m skeptical of the idea that keeping AI systems’ details secret makes any meaningful contribution to AI safety. Let’s posit that future AI systems could be dangerous and you want to keep them out of the hands of the “wrong people”. Then how much does it help to keep the details secret?

I say not much—the most dangerous thing isn’t the details of the model, the most dangerous thing is the demo.

If you go and demonstrate “I built a system on these broad principles and it produced these amazing capabilities” then you’ve short-circuited the entire feedback loop for everyone else. They know what’s possible, and so will immediately kick their investment into high gear.

That’s not to say keeping others out of the race is impossible. Say you’ve found Gold Island and you want the gold but you don’t want your adversaries to start big ship-building programs. What you could do is promise to sink any ships from anyone else you see exploring the seas. If you have enough of an advantage in shipbuilding (perhaps fueled by all that gold) maybe no one else will bother to try to compete.

In principle, one AI company (or a few?) could build such a lead that it would be pointless for anyone else to try to follow them. This would probably be done by (A) having an enormous tech lead and (B) charging such low prices that it would be impossible for anyone else to enter the market and make any money. Maybe you’d even want to give free access to your adversaries so they have no incentive to develop their own AI systems.

Maybe that could work. But it’s not what’s happening. From what I see, every new demo accelerates investment by everyone else. And so far at least, there’s little technological moat—the secret details of today are the open-source program you can run on your phone tomorrow.

"...the most dangerous thing isn’t the details of the model, the most dangerous thing is the demo."

Great insight. Which is why I think the obsession with "safety" for AI chatbots is useless. The tech is out there, and it's going to be used in ways the AI censors might not approve of.

Anyway, "safety" is a valid concept for nuclear weapons, but not for words spewed out by a machine. No matter how offensive these words may be -- as we have seen with the often humorous examples from ChatGPT, Bing, etc. -- they are not "unsafe" in any objective sense. So with regard to this issue, my rallying cry is: Free speech for AI!

It's not obvious to me that GPT-2 would have had enough training data in 2005. It was trained on 40GB of text, over 8 million documents, scraped from web pages linked in upvoted Reddit posts. Could we have done that back then?