Bayes is not a phase

it's forever but weird to argue about

People make fun of techie/rationalist/effective-altruist types for many weird obsessions, like stimulants or meditation or polyamory or psychedelics or seed oils or air quality or re-deriving all of philosophy from scratch. Some of these seem fair to me, or at least understandable.

But the single most common point of mockery is surely the obsession with “Bayesian” reasoning. Many people seem to see this as some screwy hipster fad, some alternate mode of logic that all these weirdos have decided to trust instead of normal human thinking.

This drives me crazy.

Because everyone uses Bayesian reasoning all the time, even if they don’t think of it that way. Arguably, we’re born Bayesian and do it instinctively. It’s normal and natural and—I daresay—almost boring. “Bayesian reasoning” is just a slight formalization of everyday thought.

It’s not a trend. It’s forever. But it’s forever like arithmetic is forever: Strange to be obsessed with it, but really strange to make fun of someone for using it.

Here, I’ll explain what Bayesian reasoning is, why it’s so fundamental, why people argue about it, and why much of that controversy is ultimately a boring semantic debate of no interest to an enlightened person like yourself. Then, for the haters, I’ll give some actually good reasons to be skeptical about how useful it is in practice.

I won’t use any equations. That’s not because I don’t think you can take it, but because Bayesian reasoning isn’t math. It’s a concept. The typical explanations use lots of math and kind of gesture around the concept, but never seem to get to the core of it, which I think leads people to miss the forest for the trees.

Examples

Let’s get our intuition flowing with a few examples.

Bored one day, you convince a friend to give you an antinuclear antibody test for lupus. (It beats watching TV.) To your shock, the test is positive. After seeing that it’s 90% accurate, you sink into existential terror. But then you remember that only 0.5% of people actually have lupus, so if you gave this test to 2000 random people, you’d get ~199 false positives and only ~9 true positives. Then you feel less scared.

You take a penny and flip it 20 times, getting 16 heads. You plug this into a calculator which tells you that with 95% confidence, the true bias is between 57.6% and 92.9%. This calculator isn’t making a mistake. But still, this was a normal penny. So you’re pretty sure the bias is very close to 50% and you just got 16 heads by chance.

You wonder if artificial superintelligence will be created in the next five years. That sounds weird, so you figure the chance is 1%. But then you notice that AI seems to be getting better at a suspicious rate. And you see that lots of expert forecasters give higher numbers. So you raise your estimate to 5%.

You wonder if plants are conscious. You decide there’s a 0.05% chance.
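For the curious, the head-counting in the lupus example above can be sketched in a few lines of Python. This is only a rough sketch: it assumes "90% accurate" means the test is right 90% of the time for both sick and healthy people, and the function name `natural_frequencies` is made up for illustration.

```python
from fractions import Fraction

def natural_frequencies(population, base_rate, accuracy):
    """Split a tested population into true and false positives.

    Assumes `accuracy` is both the true-positive rate and the
    true-negative rate, which is how the example treats it.
    """
    sick = population * base_rate
    healthy = population - sick
    true_positives = sick * accuracy
    false_positives = healthy * (1 - accuracy)
    return true_positives, false_positives

true_pos, false_pos = natural_frequencies(
    population=2000,
    base_rate=Fraction(5, 1000),  # 0.5% of people have lupus
    accuracy=Fraction(9, 10),     # the test is "90% accurate"
)

# Of everyone who tests positive, what share is actually sick?
p_lupus_given_positive = true_pos / (true_pos + false_pos)

print(f"true positives:  {float(true_pos):.0f}")
print(f"false positives: {float(false_pos):.0f}")
print(f"chance of lupus after a positive test: {float(p_lupus_given_positive):.1%}")
```

With these inputs the share works out to 9 out of 208, a bit over 4%.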

Probably you find some of these situations more objectionable than others. But what’s really happening here?

What is “Bayesian reasoning”?

You may have heard of something called “Bayes’ equation”. Forget it. It’s a distraction. Everyone uses that equation, including people that hate Bayesian reasoning.

The core of Bayesian reasoning is a concept which cannot be translated into math. Here’s how I like to put it:

Mixing aleatoric uncertainty and epistemic uncertainty: Good.

I’m very sorry to define a fancy word using two even-fancier ones. But “aleatoric” and “epistemic” get at something important. Consider these two statements:

My favorite U-235 atom has a 0.0000000985% probability it will decay in the next year.

There’s an 82% probability I’m taller than you.

The word “probability” appears in both these statements. But it means completely different things.

U-235 decays due to random quantum fluctuations. The decay probability has a clear physical meaning. If you get a few quadrillion U-235 atoms together (not too close, please) then after a year, something very close to 0.0000000985% will have decayed. That’s “aleatoric” uncertainty.
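If you want to see where a number like that can come from, here is a small sketch. It assumes a U-235 half-life of about 703.8 million years (a figure not stated above) and the usual exponential decay law; the names `HALF_LIFE_YEARS` and `decay_probability` are just for illustration.

```python
import math

HALF_LIFE_YEARS = 7.038e8  # assumed half-life of U-235, roughly 703.8 million years

def decay_probability(years, half_life=HALF_LIFE_YEARS):
    """Probability that one atom decays within `years`."""
    # Same as 1 - 2**(-years / half_life), but written with expm1 so the
    # tiny result is not lost to floating-point rounding.
    return -math.expm1(-math.log(2) * years / half_life)

p_one_year = decay_probability(1)
print(f"chance one atom decays in a year: {p_one_year * 100:.10f}%")

# With a few quadrillion atoms, the decayed fraction will sit very close
# to that probability, which is what makes it "aleatoric".
atoms = 4e15
print(f"expected decays among {atoms:.0e} atoms: {p_one_year * atoms:,.0f}")
```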

Meanwhile, I’m either taller than you or I’m not. You may not know, but it’s a fixed fact about the universe that either is true or isn’t. That 82% probability is a feeling that exists in your brain. That’s “epistemic” uncertainty.

Why are people obsessed with it?

So why would you mix those two types of uncertainty together? And why does Bayesian thinking almost seem like a religion to some people? I think the strongest reason is that it gives optimal decision procedures.

Say you come to dinner at my house. After eating, you were hoping for brandy and cigars, but instead I bring out a jar. Inside it is one gold coin worth $1000 and 4 worthless fake coins. They all look the same, except the fake coins have heads on both sides.

Now, I offer you a deal: If you give me $125, then I’ll pick a random coin and give it to you. Should you accept?

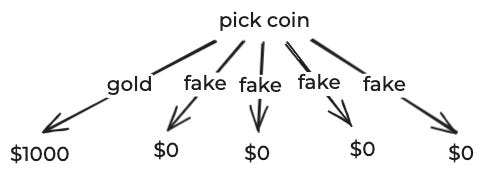

This is pretty trivial, but let’s go through the logic. There are five possible outcomes. In one you get something worth $1000, while in the others, you get something worth $0.

Since each outcome is equally likely, on average you get something worth $200. You should accept my wager. (At least, assuming you have enough liquidity that you’re risk neutral. If you need that $125 to buy food for the next week, don’t gamble it.)

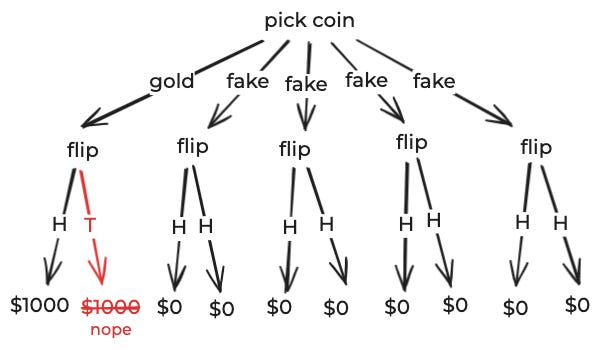

Easy. But say instead I draw a random coin and flip it onto the table. It happens to land heads. Then I point to it and ask: Would you like to pay $125 for that coin?

If you’re Bayesian, you’d reason like this: There are five coins, each of which has two sides. So there are 10 equally likely outcomes:

But since you saw heads, one of those outcomes is impossible.

If you start with 10 equally likely outcomes and then rule one out, the other 9 are still equally likely. So on average, in this situation, you’ll get something worth $1000/9 ≈ $111.11. Since that’s less than $125, you should refuse my offer.
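The counting argument for both versions of the game can be written out as a short Python sketch. The representation of coins as `(value, faces)` pairs is my own choice for illustration, not anything special.

```python
from fractions import Fraction

GOLD_VALUE = 1000

# Each coin is (value in dollars, faces). The gold coin has a head and a
# tail; the four fakes have heads on both sides.
coins = [(GOLD_VALUE, ("H", "T"))] + [(0, ("H", "H"))] * 4

# First game: draw a coin at random. Five equally likely outcomes.
value_of_random_draw = Fraction(sum(value for value, _ in coins), len(coins))

# Second game: draw and flip. Ten equally likely (coin, face) outcomes.
outcomes = [(value, face) for value, faces in coins for face in faces]

# Seeing heads rules out the single (gold, tails) outcome.
consistent = [(value, face) for value, face in outcomes if face == "H"]
value_given_heads = Fraction(sum(value for value, _ in consistent), len(consistent))

print(f"random draw:        ${float(value_of_random_draw):.2f}")
print(f"coin showing heads: ${float(value_given_heads):.2f}")
print("worth paying $125 after seeing heads?", value_given_heads > 125)
```

Nothing here is cleverer than listing the outcomes and crossing one off, which is sort of the point.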

That’s the one true correct way to play this game: You calculate the “probability” that the coin is gold and then you use that “probability” to make decisions. Even though the coin on the table is fixed, you act like it’s random if it’s gold or not. If you make decisions in any other way, you’ll lose money over time, either by accepting losing bets, or missing out on winning bets.
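One way to check the "lose money over time" claim is to just play the game a lot. Below is a rough simulation sketch: it replays the draw-and-flip game many times and averages the coin's value over only the rounds where heads comes up. The function name, the seed, and the number of trials are arbitrary choices.

```python
import random

def average_value_when_heads(trials, seed=0):
    """Average value of the coin on the table, over rounds that show heads."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    total_value = 0
    heads_rounds = 0
    for _ in range(trials):
        is_gold = rng.randrange(5) == 0  # 1 gold coin among 5
        # Fakes always show heads; the gold coin shows heads half the time.
        shows_heads = (not is_gold) or rng.random() < 0.5
        if shows_heads:
            heads_rounds += 1
            total_value += 1000 if is_gold else 0
    return total_value / heads_rounds

average = average_value_when_heads(200_000)
print(f"average value of a heads-up coin: ${average:.2f}")
print(f"profit per round if you always pay $125: ${average - 125:.2f}")
```

The average should land near $1000/9, so someone who always pays $125 bleeds roughly $14 per round. The "probability" for the fixed coin on the table ends up matching a long-run frequency, which is why this case is so hard to argue with.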

And if you’re non-Bayesian, how do you play this game? Exactly the same way.

Well, except that you can’t talk about the “probability” that the coin is gold. So you’ll have to stumble around with “expected utilities” or whatever. But you’d better end up with something equivalent to the probabilistic calculation, because anything else is leaving money on the table.

Why this is controversial

I hope you’re now convinced that it’s useful to act as if non-aleatoric probabilities are “real” probabilities. And that thinking about them as probabilities makes this easier.

Still, I stress that it’s somewhat debatable if the above coin scenario is really “Bayesian”. If it counts at all, it’s certainly the least controversial kind, and not representative of how Bayesian reasoning is typically used in the real world.

In practice, you face a situation where you don’t know how many coins are gold vs. fake. Maybe I bring out the jar and you ask me what fraction of the coins are gold and I vaguely say, “A decent number.” Then you need to stare into my eyes and decide what kind of person you think I am. Alice might decide I’m super chill and estimate that 50% of coins are gold. Bob might decide I’m a huge jerk and only 1% are. That will lead Alice and Bob to completely different “probabilities”.
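To see how much those guesses matter, here is a tiny sketch. It assumes the earlier deal still applies (gold coins worth $1000, a price of $125, a coin drawn at random with no flip), which is my assumption and not something stated for this version of the jar.

```python
def expected_coin_value(gold_fraction, gold_value=1000):
    """Expected value of a randomly drawn coin, given a guess at the
    fraction of coins in the jar that are gold."""
    return gold_fraction * gold_value

PRICE = 125
guesses = {"Alice": 0.50, "Bob": 0.01}  # each person's subjective estimate

decisions = {}
for name, gold_fraction in guesses.items():
    value = expected_coin_value(gold_fraction)
    decisions[name] = "accept" if value > PRICE else "refuse"
    print(f"{name}: coin worth ${value:.2f} on average -> {decisions[name]}")
```

Same jar, same offer, opposite decisions. The arithmetic is identical; only the made-up input differs.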

Here’s a more realistic example. Say you make a new mRNA vaccine for the flu. You test it on a handful of patients and you seem to get good results, so you go to investors to try to get money to run a big trial so you can sell it. What’s the “probability” this drug would get FDA approval if funded?

If potential investors are Bayesian, they will mentally weigh the data from your handful of patients with the base rate for how often trials succeed. But what kind of trials should be compared against? All trials? Vaccine trials? Flu drug trials? mRNA trials? Trials in the last 20 years? These lead to different probabilities, and there’s no single right answer.

And it gets much worse than that. I can calculate a “probability” that artificial superintelligence will be invented in five years by making up a “prior” and then adjusting it based on what superforecasters say or how fast AI seems to be improving. But that prior and the adjustments will basically just be things I made up.
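To make the "things I made up" part concrete, here is a sketch of what such a calculation might look like, using the odds form of updating. The two likelihood ratios are invented purely for illustration, as are the variable names; nothing about them is measured.

```python
def update(probability, likelihood_ratio):
    """Multiply the odds by a likelihood ratio and convert back to a probability."""
    odds = probability / (1 - probability)
    new_odds = odds * likelihood_ratio
    return new_odds / (1 + new_odds)

prior = 0.01  # made up: "that sounds weird, so maybe 1%"

# Hypothetical likelihood ratios: how much more expected each observation
# is if superintelligence is coming soon than if it isn't. Both are invented.
ai_progress_looks_fast = 2.5
forecasters_say_higher = 2.1

posterior = update(update(prior, ai_progress_looks_fast), forecasters_say_higher)
print(f"prior {prior:.1%} -> posterior {posterior:.1%}")
```

With these particular inventions the answer comes out around 5%. Nudge either ratio and it moves, and there is no experiment that tells you which ratio was "right".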

So in the real world, Bayesian probabilities are on a spectrum. In some situations, like the gold coin example, they’re very hard to argue with, and being non-Bayesian seems stubborn and pedantic. But in other situations, the “probabilities” that come out are very squishy and subjective. This is why people say they aren’t “real”.

The boring debate

People have argued about Bayesian reasoning for decades. If I calculate that there’s a 4.3% probability I have lupus, is that a “real” probability?

I think a lot of the arguments ultimately boil down to semantics. You could imagine a world where we used “a-probability” for strict aleatoric uncertainty, and “b-probability” for Bayesian probabilities. Then that debate wouldn’t exist.

That dissolves the debate in the abstract. If we carefully marked everything Bayesian as “b-probabilities”, then we could argue about specific situations. How justifiable are the assumptions that were used? Some “b-probabilities” are much squishier than others.

The argument against using squishy probabilities is obvious: They’re totally subjective.

The argument in favor of squishy probabilities is more subtle. It’s that in life you have to make decisions. You either buy the coin from me or you don’t. You either fund the vaccine trial or you don’t. Making subjective assumptions is uncomfortable, but too bad, life requires hard decisions.

So why be formal about it? Why not just rely on vibes?

Well, while I think we’re all born Bayesian, we’re not great Bayesians. We have all sorts of predictable biases like base rate neglect and anchoring. The way to eliminate these is to state your assumptions formally and reason formally.

Bayesian reasoning is also very legible. If we get different numbers for the probability of AGI in the next five years, we can compare our calculations and maybe learn something.

Why not to be Bayesian

So why not be Bayesian? I think there are two main reasons.

First, outside of very simple situations, Bayesian reasoning requires using slow and unreliable algorithms. In general, Bayesian reasoning is in a complexity class slightly worse than the famous NP-complete class.

Second, outside of very simple situations, creating formal probabilistic models is hard. You need to learn the intricacies of Wishart distributions, skew normal distributions, and kurtosis. And even if you know those things, creating probabilistic models is incredibly dangerous: if you accidentally set some parameter somewhere incorrectly, you can easily get crazy results.

Compared to the general population, I think I’m comfortably in the top 0.1% in terms of my mastery of Bayesian stuff. (That’s probably true for anyone who’s ever built a non-trivial model for a real problem.)

And yet, here I have a blog where I’ve examined if seed oil is bad for you and if alien aircraft are visiting earth and if it’s a good idea to take statins or use air purifiers or get colonoscopies or eat aspartame or practice gratitude or use an ultrasonic humidifier. And I have used formal Bayesian models never. Why?

The answer is that the real world is messy and creating a formal model that would integrate all the available information would be really, really, really difficult.

If I had an infinite amount of time, I do think that would be the best approach. But I’d be incredibly paranoid that one parameter set incorrectly anywhere could lead to disaster. To create a model that I actually trusted for any of these situations would probably take me months.

Meanwhile, my brain meat has been optimized for millions of years to combine information. It’s very far from optimal, but it usually doesn’t make insane mistakes. And I can still get some of the benefits of Bayesian reasoning by keeping it in mind.

Conclusions

“Aleatoric” probabilities are different from “Bayesian” probabilities.

It’s silly to argue about which is “real”. Just say which one you’re talking about.

Some Bayesian probabilities are much squishier than others. When you see one, make sure you understand what assumptions went into it.

Life involves lots of hard choices with messy information. Theoretically, if you can formalize your assumptions, then Bayesian reasoning is the “optimal” way to make decisions.

But in practice, formalizing assumptions is both hard and dangerous. For most people in most situations, it’s probably safer to use normal human judgement, but keep Bayesian reasoning in mind as a guide.

P.S. The mentoring applications were so unbelievably impressive that I decided to pick winners randomly. I tried very hard to email everyone who applied but kept getting blocked as spam, even when trying to send out notifications in small batches. (What a web we weave.) So I’m very sorry if you didn’t get my email. I read every application and I was humbled by the amazing things you are all doing. I wish I could have accepted everyone.

>find new word "stochastic"

>use context clues to piece together meaning

>confirm with google

>means random? Why not just say random?

>years pass

>find new word "aleatoric"

:P

Look, if you define everything from pure frequentism (i.e. only aleatoric uncertainty exists) to full Bayesian as Bayesian, then of course you can't escape being Bayesian. You've defined everything as Bayesian, even the opposite. Your coin example is 100% aleatoric uncertainty, for example.

There are plenty of ways to make decisions in the presence of epistemic uncertainty without resorting to Bayesianism. You can use heuristics (e.g. when in doubt, take the safe bet -- don't bet on the coin). You can perform rule-based reasoning (e.g. if someone takes out one coin and asks you to bet on it, always refuse).

Sure, these methods may be strictly worse than Bayesian decision making, in the sense that they have poorer expected value, but people don't have to optimize for expected value. If anything, that's what defines Bayesianism to me: the belief that "winning bets", maximizing expected value, is the sine qua non of life and decision making. It's not.